In 2025, artificial intelligence (AI) has become a solid support for many businesses in software development. According to Stack Overflow, 47% of developers use AI tools daily. On the one hand, this reflects the rapid adoption of the technology; on the other hand, developers remain cautious about using it, especially in complex stages of software development.

The Stack Overflow Developer Survey 2025 revealed strong skepticism about letting AI handle high-stakes tasks:

- 72% of professional developers do not engage in vibe coding.

- 76% of developers say they don’t plan to use AI for deployment or monitoring.

- 44% of developers do not plan to use AI for testing their code.

In other words, when it comes to tasks where a mistake could be catastrophic, like writing new code for a product or mapping out an entire project, a large majority of developers still want a human in charge.

We want to offer our expert opinion, backed by our own experience in the field: AI in the testing process accelerates software delivery without compromising quality. For both clients and developers, this trend should be reassuring. Software development teams that integrate AI into their workflows and QA pipelines have reported release cycles that are 30–40% faster. Let’s weigh the pros and cons of using AI in software testing to enhance your development process.

What Are the Common Applications of AI in Test Automation?

As software systems grow more complex, traditional test automation struggles to keep up with the speed and scale of software product delivery. Using artificial intelligence (AI) here is not about replacing QA teams but about enhancing their performance and efficiency.

The most widely used AI applications in test automation include API testing, performance analysis, visual recognition, and intelligent test data generation:

| Application | How AI Is Used |
| --- | --- |
| Performance testing | AI tools analyze system performance metrics in real time to forecast potential bugs and disruptions before they become critical. This predictive insight lets developers eliminate issues early in the development process and, if necessary, improve the overall responsiveness and scalability of applications. |
| API testing | AI can analyze sets of rules and API logs to automatically generate test cases that check error handling and how different systems interact. It also helps simulate cross-browser and cross-device conditions to ensure compatibility. |
| Visual locators and computer vision | AI algorithms use computer vision and visual recognition techniques to identify and interact with interface elements. Advanced AI systems also use OCR (optical character recognition) to scan screens, detect visual regressions, and validate changes in layout or user interface (UI). |
| AI-driven analytics for test results | Automated tests generate vast amounts of data. AI structures and analyzes the results to identify patterns, classify defects, and distinguish between types of outcomes. As a result, QA teams gain clarity from test reports, leading to improved performance and accelerated product launches. |
| Natural language test generation | Natural language processing enables AI to interpret requirements, user stories, and bug reports and to generate structured test cases. This helps connect business requirements with technical test implementation and makes the process faster. |
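To make the performance testing entry more concrete, here is a minimal sketch of flagging latency samples that deviate sharply from a recent baseline. It uses a plain rolling z-score as a stand-in for the forecasting an AI tool would perform; the function name, window size, and threshold are illustrative assumptions and do not come from any specific product.

```python
from statistics import mean, stdev

def flag_latency_anomalies(samples_ms, window=5, threshold=3.0):
    """Return indexes of samples that deviate strongly from the preceding window.

    A simple statistical stand-in for AI-based forecasting (assumption).
    """
    anomalies = []
    for i in range(window, len(samples_ms)):
        baseline = samples_ms[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        # Skip perfectly flat baselines to avoid dividing by zero.
        if sigma == 0:
            continue
        if abs(samples_ms[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical response times in milliseconds; the spike sits at index 6.
latencies = [100, 102, 98, 101, 99, 100, 350, 101]
print(flag_latency_anomalies(latencies))  # prints [6]
```

A real tool would likely learn seasonality and trends instead of relying on a fixed window, but the workflow is the same: compare new measurements with an expected range and alert before the issue becomes critical.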

Debunking Myths About AI in Software Testing

Several myths and misconceptions surround the use of AI tools for software testing. Let’s look at the reality behind the most common ones:

Myth 1: “AI will replace QA engineers.”

Reality: AI can automate repetitive or time-consuming tasks (e.g., test generation or log analysis), but, as research shows, it cannot replace human QA engineers’ critical thinking, creativity, or domain expertise. AI functions more as a personal assistant that supports testers. QA experts remain essential for designing effective test strategies, interpreting results, assessing risk, and making decisions that require context and insight.

Myth 2: “AI doesn’t make mistakes.”

Reality: Generative AI can “hallucinate,” that is, produce incorrect or misleading information. As a QA tester, you need to understand your application’s business logic and context. Stack Overflow research indicates that developers don’t blindly trust AI outputs. Experts double-check test results and validate AI suggestions before relying on them. Treat AI responses as useful drafts or hints and always review them with a critical eye.

Myth 3: “You need deep machine learning expertise to use AI in testing.”

Reality: Most modern AI testing tools are designed to be user-friendly for testers and developers, so you don’t have to be a data scientist or have any ML background. You need a solid understanding of testing and the writing skills to produce coherent prompts or specifications for the AI. In practice, using AI in software testing involves either submitting a request to an AI tool or integrating an AI-powered platform into your existing workflow.

Myth 4: “AI will make testing 100% automated.”

Reality: AI can automate certain testing activities, but an expert still manages the whole testing process. AI can generate intelligent test cases, but it takes a QA specialist to question them. The specialist is also responsible for prioritizing tests, interpreting ambiguous results, and understanding the user perspective.

Myth 5: “AI tools are unreliable.”

Reality: AI tools are becoming more reliable with each iteration, but they require an understanding of the workflow. The effectiveness of AI-driven testing tools depends largely on how clearly we define use cases and validation processes. If you use an AI tool with a well-defined scope (for example, to generate test data or to analyze logs) and you validate its outputs, it can be quite dependable. Issues typically arise when tools are misused or when the provided instructions are ambiguous.

As our review of these myths shows, QA engineers remain in charge of the process and use AI to work more effectively and reduce their workload.

Where AI Adds Value in the Software Testing Lifecycle

AI can assist at all stages of the Software Testing Life Cycle (STLC): test planning, test analysis, test design, test implementation, test execution, and test completion. The table below highlights what AI contributes at each stage and what remains the QA engineer’s responsibility:

| STLC Stage | How AI Adds Value | QA Engineer Role |
| --- | --- | --- |
| Test Planning | AI analyzes historical project data (requirements, defect logs, and test results) to identify high-risk areas or modules that need greater testing focus. It can suggest test coverage outlines or prioritize testing based on risk. For example, a generative AI tool can summarize requirements or list potential risk scenarios from a specification. In short, AI suggests test coverage areas based on historical data. | Defines business goals and strategy, validates risks, and aligns scope with product requirements and overall project specifics. |
| Test Analysis | AI tools using natural language processing can parse requirement documents, specifications, or user stories to identify ambiguities, missing details, or inconsistencies. For instance, an AI assistant can highlight unclear requirements that need clarification. In short, AI parses requirement documents to highlight missing or unclear details. | Understands domain-specific logic and project or business risks. Manages strategic planning based on professional skills, priorities, and stakeholder communication. |
| Test Design | AI can create a set of positive and negative test cases that cover different scenarios for a given user story. In short, AI generates template-based test cases and test data (e.g., boundary values). | Brings a deep understanding of business flows and critical use cases. Reviews edge cases and refines scenarios, since validating the outcomes requires project context and technical expertise. Designs the test strategy and clear, structured, and traceable test cases. |
| Test Implementation | When testers describe their testing requirements in a prompt, AI can automatically generate code snippets or complete test scripts. It also supports self-healing tests, change detection, and automatic script updates to keep tests running smoothly. In short, AI creates automation code snippets for UI and API tests. | Sets up environments and prioritizes test coverage based on risk and business needs. |
| Test Execution | AI optimizes the test suite by analyzing the latest code changes and past test results and selecting only the tests that are most likely to fail. It can also detect UI and visual changes using computer vision and help with root cause analysis of defects. | Manages the overall validation that the software meets requirements before its release. |
| Test Completion | AI drafts a detailed summary or refines a bug report, improving its quality by clarifying the language and including all required information. Another area is retrospective analysis, where AI can analyze all bugs after a release and identify patterns to improve processes. In short, AI generates test reports, log summaries, and bug templates. | Interprets the final insights, which still require human judgment. Leads retrospectives, derives process improvements, and communicates quality insights to teams. |
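The test selection described for the Test Execution stage can be illustrated with a small sketch. It ranks tests by a hand-written risk score that combines "covers a changed file" with the historical failure rate. The data layout, the weights, and the function name are hypothetical; an AI-based tool would typically learn such a ranking from data rather than use fixed weights.

```python
def prioritize_tests(tests, changed_files, top_n=2):
    """Rank tests by a simple risk score and return the names of the top_n.

    `tests` maps a test name to {"covers": set of files, "runs": int, "failures": int}.
    The 0.7 / 0.3 weights are arbitrary illustrative values (assumption).
    """
    scored = []
    for name, info in tests.items():
        touches_change = 1.0 if info["covers"] & changed_files else 0.0
        failure_rate = info["failures"] / info["runs"] if info["runs"] else 0.0
        score = 0.7 * touches_change + 0.3 * failure_rate
        scored.append((score, name))
    # Highest score first; ties are broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_n]]

# Hypothetical test history for three tests.
history = {
    "test_login": {"covers": {"auth.py"}, "runs": 50, "failures": 5},
    "test_checkout": {"covers": {"cart.py", "payment.py"}, "runs": 50, "failures": 1},
    "test_profile": {"covers": {"profile.py"}, "runs": 50, "failures": 10},
}
print(prioritize_tests(history, changed_files={"payment.py"}))
# prints ['test_checkout', 'test_profile']
```

The QA engineer still decides how many tests to run and whether the ranking makes sense for the release at hand.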

Risks and Limitations in Testing

While AI offers many benefits, it also introduces new risks and limitations that quality assurance (QA) teams need to manage. Keep the following concerns in mind when using AI for testing:

Inaccuracy and Hallucination: AI-powered test generation may produce outputs that appear plausible but are actually incorrect or misleading. This phenomenon is known as hallucination. In a testing context, it might mean an AI-generated test case that doesn’t actually validate the requirement, or an AI analysis that incorrectly labels a feature as risky or, conversely, as bug-free.

If you blindly accept AI-generated outputs, such inaccuracies could result in gaps in testing or lead to false assumptions about quality. QA engineers must always validate AI-generated outputs (test cases, summaries, etc.) before using them.
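Part of this validation can be automated before a human review. The sketch below checks that each AI-generated test case has the expected fields and refers to a requirement that actually exists. The field names and the requirement IDs are hypothetical and would need to match your own test case format.

```python
# Hypothetical field names for a generated test case (assumption).
REQUIRED_FIELDS = ("scenario", "steps", "expected", "requirement_id")

def review_generated_cases(cases, known_requirement_ids):
    """Split AI-generated test cases into accepted ones and ones needing review."""
    accepted, rejected = [], []
    for case in cases:
        problems = [f"missing field: {f}" for f in REQUIRED_FIELDS if not case.get(f)]
        req_id = case.get("requirement_id")
        # A reference to a requirement that does not exist hints at a hallucination.
        if req_id and req_id not in known_requirement_ids:
            problems.append(f"unknown requirement: {req_id}")
        if problems:
            rejected.append((case, problems))
        else:
            accepted.append(case)
    return accepted, rejected

generated = [
    {"scenario": "Valid login", "steps": "Enter valid credentials",
     "expected": "Dashboard opens", "requirement_id": "REQ-1"},
    {"scenario": "Login via fingerprint", "steps": "Touch the sensor",
     "expected": "Dashboard opens", "requirement_id": "REQ-999"},
]
accepted, rejected = review_generated_cases(generated, known_requirement_ids={"REQ-1", "REQ-2"})
print(len(accepted), rejected[0][1])
```

A check like this catches only structural problems. Whether the steps really validate the requirement is still a judgment for the QA engineer.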

Data Privacy and Security: Many AI tools, particularly cloud-based services, may require sending data to third-party servers for processing. This raises concerns when sensitive project or production data is used in prompts or for test generation. For example, uploading real user data to an AI service could violate privacy policies or expose confidential information. There is also a risk that AI tools may store or log the information you input. The QA team must follow strict data-handling policies to prevent the unintentional leakage of confidential data.
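One practical safeguard is to redact obvious identifiers before any text leaves your environment. The following is a minimal sketch under the assumption that e-mail addresses and card-like digit sequences are the sensitive items; the patterns are illustrative only and are not a complete or compliant anonymization scheme.

```python
import re

# Illustrative patterns only; real projects need rules that match their own data.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,16}\b")

def redact(text):
    """Replace e-mail addresses and card-like digit runs with placeholders."""
    text = EMAIL_RE.sub("<EMAIL>", text)
    text = CARD_RE.sub("<CARD>", text)
    return text

# Hypothetical log line containing personal data.
log_line = "User jane.doe@example.com paid with 4111 1111 1111 1111"
print(redact(log_line))  # prints: User <EMAIL> paid with <CARD>
```

Redaction of this kind reduces the risk but does not remove it, so it complements rather than replaces the team’s data-handling policy.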

Bias and Ethical Issues: AI models can inherit biases from the data they learn from. In testing, this could cause the AI to suggest skewed tests. For example, it might pay attention only to certain types of inputs or user profiles while ignoring others. QA engineers need to be aware of this possibility and ensure test coverage is fair and complete across all user groups, so that these biases do not harm quality outcomes.

Overreliance on AI: As AI handles more testing tasks, there is a risk that engineers may become over-dependent on it. If QA engineers start trusting AI’s results without understanding or verifying them, they risk losing their critical thinking and manual testing skills.

“Vibe Coding” and Skill Degradation: An emerging trend is “vibe coding,” in which developers or testers accept AI-generated code or answers without fully verifying them. In a QA context, “vibe testing” could mean using AI to write test scripts or perform analysis and simply trusting that it’s correct because it looks right. The risk is that QA engineers may become passive executors, blindly following AI outputs. They might skip the deep understanding of the system or the code, leading to a loss of expertise over time.

Benefits of AI in Software Testing

A primary benefit of using AI in software testing is faster product launches. By using AI tools and techniques effectively and in the proper sequence throughout development and testing, teams spend less time on repetitive testing tasks and traditional automated testing cycles while improving coverage and debugging.

- Early Development Stages: The acceleration starts early in the development lifecycle. In the requirements and design stage, AI can be used to validate and clarify requirements and quickly analyze user stories. It can also generate quick prototypes or simulate user flows to gather early feedback.

- Test Design and Automation: Teams can use AI to suggest relevant test scenarios and automatically generate the necessary scripts, instead of manually writing extensive test cases or automation scripts for each new feature. AI assistance compresses tasks that typically take days, such as writing all necessary tests for a complex feature, into hours.

- Optimized Testing Cycles: AI-powered test automation platforms also allow teams to run multiple tests simultaneously, reducing overall testing time and enabling faster release cycles. Together, these options help developers receive feedback more quickly, which in turn leads to faster bug fixes and an earlier product release.

- Better Coverage: AI generates unique scenarios and uses machine learning to produce varied inputs, expanding the testing scope. It becomes easier to catch bugs that might otherwise have gone unnoticed and to simulate production usage patterns, which aligns testing more closely with real-world scenarios.

- Accelerated Debugging: AI tools can automate log analysis, freeing developers and testers from hours spent combing through logs to identify the root cause of a failure. If a test in the CI pipeline fails, an AI assistant can quickly point to the commit most likely to have introduced the defect.

Developers get detailed defect reports and can fix issues faster. In essence, AI reduces the time required to diagnose and fix bugs, facilitates communication between development and testing teams, and helps avoid lengthy debugging sessions.
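The log analysis mentioned under accelerated debugging can be approximated with a simple rule-based sketch. It normalizes variable parts of error lines (numbers, hex addresses) so that similar failures collapse into one signature and can be counted. This is a hand-written stand-in for the clustering an AI tool might perform; the log format and function names are assumptions.

```python
import re
from collections import Counter

def failure_signature(line):
    """Normalize a log line so that similar failures share one signature."""
    line = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", line)  # memory addresses
    line = re.sub(r"\d+", "<N>", line)               # ids, ports, durations
    return line.strip()

def summarize_failures(log_lines):
    """Count ERROR lines by signature, most frequent first."""
    signatures = (failure_signature(l) for l in log_lines if "ERROR" in l)
    return Counter(signatures).most_common()

# Hypothetical CI log excerpt.
logs = [
    "INFO request 101 ok",
    "ERROR timeout after 3000 ms on order 17",
    "ERROR timeout after 3000 ms on order 42",
    "ERROR null pointer at 0x7ffab3",
]
for signature, count in summarize_failures(logs):
    print(count, signature)
```

Grouping failures this way shortens the list a person has to read, which is the same benefit the AI-assisted analysis aims for at a larger scale.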

AI Prompting Algorithm for QA

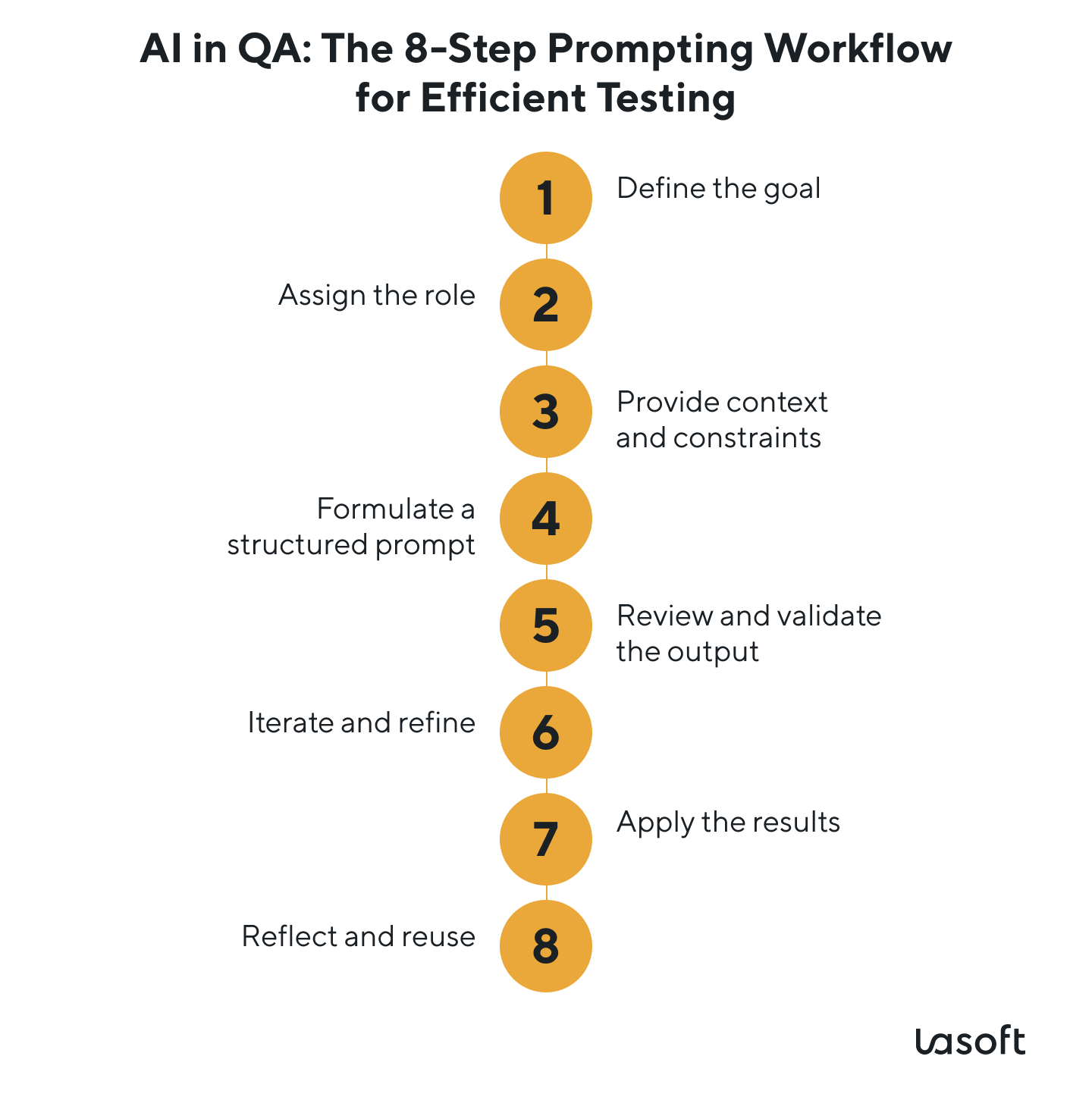

The quality of output from AI tools, such as ChatGPT or Copilot, depends on how you formulate your requests or tasks. Creating proper prompts is a valuable skill, and many experts take courses to become certified prompt engineers. Here is an 8-step prompting strategy to help QA engineers interact with AI systematically:

| Step | Advice | Example |
| --- | --- | --- |
| 1. Define the Goal | Clearly define what you want from the AI, specifying the problem or task you want it to address. | “I need to make five boundary-value test cases for the login function.” By stating the desired outcome, such as boundary-value test cases for login, you provide the AI with a clear target. |
| 2. Assign the Role | Instruct the AI to take on the role of a QA engineer or a test automation expert. Defining a role sets the scope of expertise and the tone, and it guides the AI in crafting appropriate answers. | “Act as a Senior QA Engineer specialized in security testing.” The AI’s response will aim to reflect how an expert tester might think. Setting the role helps keep the suggestions relevant to testing practices. |
| 3. Provide Context and Constraints | Provide the AI with context, background, and constraints or criteria. For a QA task, the context might include the feature description, requirements, user story, acceptance criteria, and the tech stack. Also mention any constraints, such as “only use English,” “include a detailed list of issues,” or “adhere to the company’s format for test cases.” The more relevant details you provide, the more accurate and grounded the AI’s response will be. | If the feature is a login form, provide the user story and acceptance criteria. If you, as a tester, only want certain types of tests, say so (e.g., “focus on edge cases around input validation”). |
| 4. Formulate a Structured Prompt | Structure your prompt or question clearly, for example in steps or bullet points, and specify the output format if needed. A structured prompt guides the AI to produce a structured response. | “Generate test cases in a table with columns: Test Scenario, Test Steps, Expected Result.” |
| 5. Review and Validate the Output | Check whether the test cases or the AI-generated analysis are coherent, and identify any missing or incorrect information. If the AI suggested something unusual, such as “hallucinating” a requirement that does not actually exist, flag it and correct it. At this step, you are validating that the output meets your goal and is actually correct. | |
| 6. Iterate and Refine | The first AI answer often isn’t perfect. If you find that the information is incorrect, refine your prompt and ask follow-up questions. Provide feedback or additional information to the AI and ask it to improve. | “Consider X scenario as well,” or “Expand on this particular point,” or even rephrase the original question, including details for clarity. |
| 7. Apply the Results | Once you are satisfied with the AI’s output, apply it to your workflow, for example by adding the generated test cases to your test suite. Ensure the AI-generated content is formatted correctly for your needs and integrates with your existing documentation or automation scripts. | If the AI provided test ideas, you might now write them as formal test cases in your test management tool. Always double-check that the output fits your project’s standards and environment. The key is to make the AI output actionable in your QA process. |
| 8. Reflect and Reuse | After using the AI’s output, take a moment to learn from the experience. Note which prompts yielded proper results and what needed tweaking. Build a library of effective prompts for future use. | |
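Steps 1 to 4 lend themselves to a reusable template. The sketch below assembles a structured prompt from a goal, a role, context, constraints, and an output format, using the examples from the table. The function name and the user story text are hypothetical, and the result is a plain string that you would pass to whichever AI tool you use.

```python
def build_prompt(goal, role, context, constraints, output_format):
    """Assemble a structured prompt from the pieces described in steps 1-4."""
    constraint_lines = "\n".join(f"- {c}" for c in constraints)
    return (
        f"Act as {role}.\n\n"
        f"Goal: {goal}\n\n"
        f"Context:\n{context}\n\n"
        f"Constraints:\n{constraint_lines}\n\n"
        f"Output format: {output_format}"
    )

prompt = build_prompt(
    goal="Create five boundary-value test cases for the login function.",
    role="a Senior QA Engineer specialized in security testing",
    # Hypothetical user story, included only for illustration.
    context="User story: a registered user logs in with an e-mail and password.",
    constraints=["Focus on edge cases around input validation", "Only use English"],
    output_format="A table with columns: Test Scenario, Test Steps, Expected Result",
)
print(prompt)
```

Keeping prompts in a function like this also supports step 8: templates that worked well can be stored and reused across features.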

The Evolving Role of QA Engineers

As AI takes over many routine testing tasks, the role of the QA engineer is transforming into that of a strategic quality advisor and analyst:

From Test Executor to Quality Strategist: With AI-assisted options, much of the execution and even test generation can be automated. This allows you, as a QA professional, to focus on test strategy and design at a higher level.

AI as a QA Assistant: The AI drafts test cases, and the QA engineer reviews and manages that work. The value a QA engineer adds lies in the ability to interpret data and provide context, while the AI processes large amounts of data and performs routine verifications.

Becoming an AI-driven Analyst: QA engineers are expanding their skill sets into data analysis, domain expertise, and machine learning. They interpret AI outputs such as analytics dashboards and failure pattern reports.

Continuous Learning and Adaptation: AI tools and techniques are still emerging and improving, which means QA engineers need to learn continuously. This might involve learning to write better prompts, understanding how to validate AI outputs, and staying up to date on new AI features and platforms.

Focus on Higher-Value Tasks: QA engineers can focus on communication, for example, working closely with developers and product owners to discuss risks and mitigations, which requires emotional intelligence and negotiation skills that AI lacks. The focus of QA shifts from personally executing tests to ensuring that testing is carried out properly. As the expert in the field, the QA engineer tells the team when the product is ready, identifies remaining risks, and guides software improvements.

Conclusion

As this article shows, by intelligently assisting with repetitive tasks, surfacing insights from massive datasets, and enabling faster, smarter decision-making, AI becomes an assistant to experts throughout the software development lifecycle. For teams looking to maintain automated tests and accelerate delivery without sacrificing reliability, AI changes the software testing process, making it both efficient and scalable.